BDS 761: Data Science and Machine Learning I

Topic 3: Introduction to Natural Language Processing

Custom GPT for this class¶

https://chatgpt.com/g/g-SSBhmwHol-introductory-data-science-tutor

Some ChatGPT chats¶

Machine Learning in Biostatistics https://chatgpt.com/share/66eb1538-74bc-800c-b1df-a8ce96b01b9a

Data Analyst GPT Differences https://chatgpt.com/share/66eb1fd9-0aac-800c-bbb6-cbf6936d157e

This topic:¶

- Small NLP packages

- NLP with LLMs

Reading:

- IBM: What is NLP (natural language processing)? https://www.ibm.com/topics/natural-language-processing

History¶

- Natural Language Processing is an old field of study in computer science and Artificial Intelligence research

- E.g. to make a program which can interact with people via natural language in text format

- Tasks range from basic data wrangling operations to advanced A.I.

- Many "canned" problems were posed for competitions and research

- Hardest major problems arguably solved very very recently by large language models

Canned problem examples¶

- Part-of-speech tagging

- Named entity recognition

- Sentiment analysis

- Machine Translation

Inlcudes some of the tasks we solved last class

NLP Python Packages¶

Small libraries to solve the easier NLP problems and related string operations

May include crude solutions for the harder problems (i.e. low-accuracy speech recognition)

- NLTK

- TextBlob

- SpaCy

Python Natural Language Toolkit (NLTK)¶

Natural Language Toolkit (nltk) is a Python package for NLP

Pros: Common, Functionality

Cons: academic, slow, Awkward API

import nltk

# help(nltk)

Download NLTK corpora (3.4GB)¶

#nltk.download('genesis')

#nltk.download('brown')

nltk.download('abc')

[nltk_data] Downloading package abc to [nltk_data] C:\Users\micro\AppData\Roaming\nltk_data... [nltk_data] Unzipping corpora\abc.zip.

True

from nltk.corpus import abc

dir(abc)

['_LazyCorpusLoader__args', '_LazyCorpusLoader__kwargs', '_LazyCorpusLoader__load', '_LazyCorpusLoader__name', '_LazyCorpusLoader__reader_cls', '__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattr__', '__getattribute__', '__getstate__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__le__', '__lt__', '__module__', '__name__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', '_unload', 'subdir']

print(abc.raw()[:500])

PM denies knowledge of AWB kickbacks The Prime Minister has denied he knew AWB was paying kickbacks to Iraq despite writing to the wheat exporter asking to be kept fully informed on Iraq wheat sales. Letters from John Howard and Deputy Prime Minister Mark Vaile to AWB have been released by the Cole inquiry into the oil for food program. In one of the letters Mr Howard asks AWB managing director Andrew Lindberg to remain in close contact with the Government on Iraq wheat sales. The Opposition's G

Tokenize¶

import nltk

help(nltk.tokenize)

Help on package nltk.tokenize in nltk:

NAME

nltk.tokenize - NLTK Tokenizer Package

DESCRIPTION

Tokenizers divide strings into lists of substrings. For example,

tokenizers can be used to find the words and punctuation in a string:

>>> from nltk.tokenize import word_tokenize

>>> s = '''Good muffins cost $3.88\nin New York. Please buy me

... two of them.\n\nThanks.'''

>>> word_tokenize(s) # doctest: +NORMALIZE_WHITESPACE

['Good', 'muffins', 'cost', '$', '3.88', 'in', 'New', 'York', '.',

'Please', 'buy', 'me', 'two', 'of', 'them', '.', 'Thanks', '.']

This particular tokenizer requires the Punkt sentence tokenization

models to be installed. NLTK also provides a simpler,

regular-expression based tokenizer, which splits text on whitespace

and punctuation:

>>> from nltk.tokenize import wordpunct_tokenize

>>> wordpunct_tokenize(s) # doctest: +NORMALIZE_WHITESPACE

['Good', 'muffins', 'cost', '$', '3', '.', '88', 'in', 'New', 'York', '.',

'Please', 'buy', 'me', 'two', 'of', 'them', '.', 'Thanks', '.']

We can also operate at the level of sentences, using the sentence

tokenizer directly as follows:

>>> from nltk.tokenize import sent_tokenize, word_tokenize

>>> sent_tokenize(s)

['Good muffins cost $3.88\nin New York.', 'Please buy me\ntwo of them.', 'Thanks.']

>>> [word_tokenize(t) for t in sent_tokenize(s)] # doctest: +NORMALIZE_WHITESPACE

[['Good', 'muffins', 'cost', '$', '3.88', 'in', 'New', 'York', '.'],

['Please', 'buy', 'me', 'two', 'of', 'them', '.'], ['Thanks', '.']]

Caution: when tokenizing a Unicode string, make sure you are not

using an encoded version of the string (it may be necessary to

decode it first, e.g. with ``s.decode("utf8")``.

NLTK tokenizers can produce token-spans, represented as tuples of integers

having the same semantics as string slices, to support efficient comparison

of tokenizers. (These methods are implemented as generators.)

>>> from nltk.tokenize import WhitespaceTokenizer

>>> list(WhitespaceTokenizer().span_tokenize(s)) # doctest: +NORMALIZE_WHITESPACE

[(0, 4), (5, 12), (13, 17), (18, 23), (24, 26), (27, 30), (31, 36), (38, 44),

(45, 48), (49, 51), (52, 55), (56, 58), (59, 64), (66, 73)]

There are numerous ways to tokenize text. If you need more control over

tokenization, see the other methods provided in this package.

For further information, please see Chapter 3 of the NLTK book.

PACKAGE CONTENTS

api

casual

destructive

legality_principle

mwe

nist

punkt

regexp

repp

sexpr

simple

sonority_sequencing

stanford

stanford_segmenter

texttiling

toktok

treebank

util

FUNCTIONS

sent_tokenize(text, language='english')

Return a sentence-tokenized copy of *text*,

using NLTK's recommended sentence tokenizer

(currently :class:`.PunktSentenceTokenizer`

for the specified language).

:param text: text to split into sentences

:param language: the model name in the Punkt corpus

word_tokenize(text, language='english', preserve_line=False)

Return a tokenized copy of *text*,

using NLTK's recommended word tokenizer

(currently an improved :class:`.TreebankWordTokenizer`

along with :class:`.PunktSentenceTokenizer`

for the specified language).

:param text: text to split into words

:type text: str

:param language: the model name in the Punkt corpus

:type language: str

:param preserve_line: A flag to decide whether to sentence tokenize the text or not.

:type preserve_line: bool

FILE

c:\users\micro\anaconda3\envs\rise_083124\lib\site-packages\nltk\tokenize\__init__.py

# nltk.download('punkt_tab') # <--- may need to do this first, see error

from nltk.tokenize import word_tokenize

s = '''Good muffins cost $3.88\nin New York. Please buy me two of them.\n\nThanks.'''

word_tokenize(s) # doctest: +NORMALIZE_WHITESPACE

['Good', 'muffins', 'cost', '$', '3.88', 'in', 'New', 'York', '.', 'Please', 'buy', 'me', 'two', 'of', 'them', '.', 'Thanks', '.']

from nltk.tokenize import word_tokenize

text1 = "It's true that the chicken was the best bamboozler in the known multiverse."

tokens = word_tokenize(text1)

print(tokens)

['It', "'s", 'true', 'that', 'the', 'chicken', 'was', 'the', 'best', 'bamboozler', 'in', 'the', 'known', 'multiverse', '.']

Stemming¶

Chopping off the ends of words.

from nltk import stem

porter = stem.porter.PorterStemmer()

porter.stem("cars")

'car'

porter.stem("octopus")

'octopu'

porter.stem("am")

'am'

"Stemmers"¶

There are 3 types of commonly used stemmers, and each consists of slightly different rules for systematically replacing affixes in tokens. In general, Lancaster stemmer stems the most aggresively, i.e. removing the most suffix from the tokens, followed by Snowball and Porter

Porter Stemmer:

- Most commonly used stemmer and the most gentle stemmers

- The most computationally intensive of the algorithms (Though not by a very significant margin)

- The oldest stemming algorithm in existence

Snowball Stemmer:

- Universally regarded as an improvement over the Porter Stemmer

- Slightly faster computation time than the Porter Stemmer

Lancaster Stemmer:

- Very aggressive stemming algorithm

- With Porter and Snowball Stemmers, the stemmed representations are usually fairly intuitive to a reader

- With Lancaster Stemmer, shorter tokens that are stemmed will become totally obfuscated

- The fastest algorithm and will reduce the vocabulary

- However, if one desires more distinction between tokens, Lancaster Stemmer is not recommended

from nltk import stem

tokens = ['player', 'playa', 'playas', 'pleyaz']

# Define Porter Stemmer

porter = stem.porter.PorterStemmer()

# Define Snowball Stemmer

snowball = stem.snowball.EnglishStemmer()

# Define Lancaster Stemmer

lancaster = stem.lancaster.LancasterStemmer()

print('Porter Stemmer:', [porter.stem(i) for i in tokens])

print('Snowball Stemmer:', [snowball.stem(i) for i in tokens])

print('Lancaster Stemmer:', [lancaster.stem(i) for i in tokens])

Porter Stemmer: ['player', 'playa', 'playa', 'pleyaz'] Snowball Stemmer: ['player', 'playa', 'playa', 'pleyaz'] Lancaster Stemmer: ['play', 'play', 'playa', 'pleyaz']

Lemmatization¶

https://www.nltk.org/api/nltk.stem.wordnet.html

WordNet Lemmatizer

Provides 3 lemmatizer modes: _morphy(), morphy() and lemmatize().

lemmatize() is a permissive wrapper around _morphy(). It returns the shortest lemma found in WordNet, or the input string unchanged if nothing is found.

Lemmatize word by picking the shortest of the possible lemmas, using the wordnet corpus reader’s built-in _morphy function. Returns the input word unchanged if it cannot be found in WordNet.

from nltk.stem import WordNetLemmatizer as wnl

print('WNL Lemmatization:',wnl().lemmatize('solution'))

print('Porter Stemmer:', porter.stem('solution'))

WNL Lemmatization: solution Porter Stemmer: solut

Edit distance¶

from nltk.metrics.distance import edit_distance

edit_distance('intention', 'execution')

5

Textblob¶

https://textblob.readthedocs.io/en/dev/

"Python library for processing textual data. It provides a simple API for diving into common natural language processing (NLP) tasks such as part-of-speech tagging, noun phrase extraction, sentiment analysis, classification, and more."

import textblob

# help(textblob)

from textblob import TextBlob

text1 = '''

It’s too bad that some of the young people that were killed over the weekend

didn’t have guns attached to their [hip],

frankly, where bullets could have flown in the opposite direction...

'''

text2 = '''

A President and "world-class deal maker," marveled Frida Ghitis, who demonstrates

with a "temper tantrum," that he can't make deals. Who storms out of meetings with

congressional leaders while insisting he's calm (and lines up his top aides to confirm it for the cameras).

'''

blob1 = TextBlob(text1)

blob2 = TextBlob(text2)

from nltk.corpus import abc

blob3 = TextBlob(abc.raw())

blob3.words[:50]

WordList(['PM', 'denies', 'knowledge', 'of', 'AWB', 'kickbacks', 'The', 'Prime', 'Minister', 'has', 'denied', 'he', 'knew', 'AWB', 'was', 'paying', 'kickbacks', 'to', 'Iraq', 'despite', 'writing', 'to', 'the', 'wheat', 'exporter', 'asking', 'to', 'be', 'kept', 'fully', 'informed', 'on', 'Iraq', 'wheat', 'sales', 'Letters', 'from', 'John', 'Howard', 'and', 'Deputy', 'Prime', 'Minister', 'Mark', 'Vaile', 'to', 'AWB', 'have', 'been', 'released'])

from textblob import Word

nltk.download('wordnet')

[nltk_data] Downloading package wordnet to [nltk_data] C:\Users\micro\AppData\Roaming\nltk_data... [nltk_data] Package wordnet is already up-to-date!

True

w = Word("cars")

w.lemmatize()

'car'

Word("octopi").lemmatize()

'octopus'

Word("am").lemmatize()

'am'

w = Word("litter")

w.definitions

['the offspring at one birth of a multiparous mammal', 'rubbish carelessly dropped or left about (especially in public places)', 'conveyance consisting of a chair or bed carried on two poles by bearers', 'material used to provide a bed for animals', 'strew', 'make a place messy by strewing garbage around', 'give birth to a litter of animals']

text = """A green hunting cap squeezed the top of the fleshy balloon of a head. The green earflaps, full of large ears and uncut hair and the fine bristles that grew in the ears themselves, stuck out on either side like turn signals indicating two directions at once. Full, pursed lips protruded beneath the bushy black moustache and, at their corners, sank into little folds filled with disapproval and potato chip crumbs. In the shadow under the green visor of the cap Ignatius J. Reilly’s supercilious blue and yellow eyes looked down upon the other people waiting under the clock at the D.H. Holmes department store, studying the crowd of people for signs of bad taste in dress. """

blob = TextBlob(text)

blob.sentences

[Sentence("A green hunting cap squeezed the top of the fleshy balloon of a head."),

Sentence("The green earflaps, full of large ears and uncut hair and the fine bristles that grew in the ears themselves, stuck out on either side like turn signals indicating two directions at once."),

Sentence("Full, pursed lips protruded beneath the bushy black moustache and, at their corners, sank into little folds filled with disapproval and potato chip crumbs."),

Sentence("In the shadow under the green visor of the cap Ignatius J. Reilly’s supercilious blue and yellow eyes looked down upon the other people waiting under the clock at the D.H. Holmes department store, studying the crowd of people for signs of bad taste in dress.")]

#blob3.word_counts

blob3.word_counts['the'],blob3.word_counts['and'],blob3.word_counts['people']

(41626, 14876, 1281)

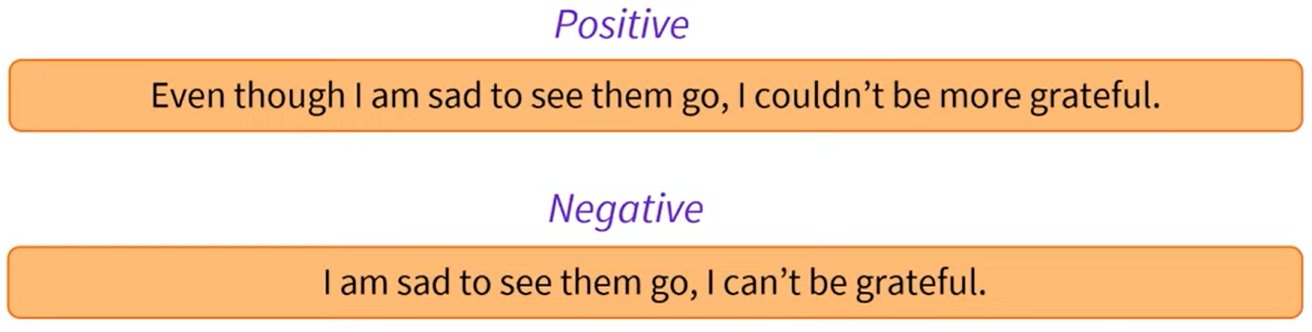

Sentiment Analysis¶

blob1.sentiment

Sentiment(polarity=-0.19999999999999996, subjectivity=0.26666666666666666)

blob2.sentiment

Sentiment(polarity=0.4, subjectivity=0.625)

# -1 = most negative, +1 = most positive

print(TextBlob("this is horrible").sentiment)

print(TextBlob("this is lame").sentiment)

print(TextBlob("this is awesome").sentiment)

print(TextBlob("this is x").sentiment)

Sentiment(polarity=-1.0, subjectivity=1.0) Sentiment(polarity=-0.5, subjectivity=0.75) Sentiment(polarity=1.0, subjectivity=1.0) Sentiment(polarity=0.0, subjectivity=0.0)

# Simple approaches to NLP tasks typically used keyword matching.

print(TextBlob("this is horrible").sentiment)

print(TextBlob("this is the totally not horrible").sentiment)

print(TextBlob("this was horrible").sentiment)

print(TextBlob("this was horrible but now isn't").sentiment)

Sentiment(polarity=-1.0, subjectivity=1.0) Sentiment(polarity=0.5, subjectivity=1.0) Sentiment(polarity=-1.0, subjectivity=1.0) Sentiment(polarity=-1.0, subjectivity=1.0)

SpaCy¶

https://github.com/explosion/spaCy

"spaCy is a library for advanced Natural Language Processing in Python and Cython. It's built on the very latest research, and was designed from day one to be used in real products."

"spaCy comes with pretrained pipelines and currently supports tokenization and training for 70+ languages. It features state-of-the-art speed and neural network models for tagging, parsing, named entity recognition, text classification and more, multi-task learning with pretrained transformers like BERT, as well as a production-ready training system and easy model packaging, deployment and workflow management. spaCy is commercial open-source software, released under the MIT license."

import spacy

help(spacy)

Help on package spacy:

NAME

spacy

PACKAGE CONTENTS

__main__

about

attrs

cli (package)

compat

displacy (package)

errors

git_info

glossary

kb (package)

lang (package)

language

lexeme

lookups

matcher (package)

ml (package)

morphology

parts_of_speech

pipe_analysis

pipeline (package)

schemas

scorer

strings

symbols

tests (package)

tokenizer

tokens (package)

training (package)

ty

util

vectors

vocab

FUNCTIONS

blank(name: str, *, vocab: Union[spacy.vocab.Vocab, bool] = True, config: Union[Dict[str, Any], confection.Config] = {}, meta: Dict[str, Any] = {}) -> spacy.language.Language

Create a blank nlp object for a given language code.

name (str): The language code, e.g. "en".

vocab (Vocab): A Vocab object. If True, a vocab is created.

config (Dict[str, Any] / Config): Optional config overrides.

meta (Dict[str, Any]): Overrides for nlp.meta.

RETURNS (Language): The nlp object.

load(name: Union[str, pathlib.Path], *, vocab: Union[spacy.vocab.Vocab, bool] = True, disable: Union[str, Iterable[str]] = [], enable: Union[str, Iterable[str]] = [], exclude: Union[str, Iterable[str]] = [], config: Union[Dict[str, Any], confection.Config] = {}) -> spacy.language.Language

Load a spaCy model from an installed package or a local path.

name (str): Package name or model path.

vocab (Vocab): A Vocab object. If True, a vocab is created.

disable (Union[str, Iterable[str]]): Name(s) of pipeline component(s) to disable. Disabled

pipes will be loaded but they won't be run unless you explicitly

enable them by calling nlp.enable_pipe.

enable (Union[str, Iterable[str]]): Name(s) of pipeline component(s) to enable. All other

pipes will be disabled (but can be enabled later using nlp.enable_pipe).

exclude (Union[str, Iterable[str]]): Name(s) of pipeline component(s) to exclude. Excluded

components won't be loaded.

config (Dict[str, Any] / Config): Config overrides as nested dict or dict

keyed by section values in dot notation.

RETURNS (Language): The loaded nlp object.

DATA

Dict = typing.Dict

A generic version of dict.

Iterable = typing.Iterable

A generic version of collections.abc.Iterable.

Union = typing.Union

Union type; Union[X, Y] means either X or Y.

On Python 3.10 and higher, the | operator

can also be used to denote unions;

X | Y means the same thing to the type checker as Union[X, Y].

To define a union, use e.g. Union[int, str]. Details:

- The arguments must be types and there must be at least one.

- None as an argument is a special case and is replaced by

type(None).

- Unions of unions are flattened, e.g.::

assert Union[Union[int, str], float] == Union[int, str, float]

- Unions of a single argument vanish, e.g.::

assert Union[int] == int # The constructor actually returns int

- Redundant arguments are skipped, e.g.::

assert Union[int, str, int] == Union[int, str]

- When comparing unions, the argument order is ignored, e.g.::

assert Union[int, str] == Union[str, int]

- You cannot subclass or instantiate a union.

- You can use Optional[X] as a shorthand for Union[X, None].

logger = <Logger spacy (WARNING)>

VERSION

3.7.2

FILE

c:\users\micro\anaconda3\envs\rise_083124\lib\site-packages\spacy\__init__.py

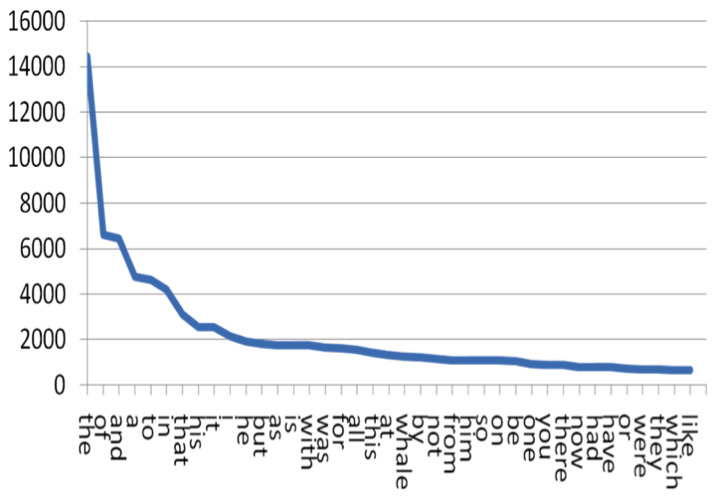

Activity: Zipf's Law¶

Zipf's law states that given a large sample of words used, the frequency of any word is inversely proportional to its rank in the frequency table. 2nd word is half as common as first word. Third word is 1/3 as common. Etc.

For example:

| Word | Rank | Frequency |

|---|---|---|

| “the” | 1st | 30k |

| "of" | 2nd | 15k |

| "and" | 3rd | 7.5k |

Plot word frequencies¶

from nltk.corpus import genesis

from collections import Counter

import matplotlib.pyplot as plt

%matplotlib inline

plt.plot(counts_sorted[:50]);

Does this confirm to Zipf Law? Why or Why not?

Activity Part 2: List the most common words¶

Activity Part 3: Remove punctuation¶

from string import punctuation

punctuation

'!"#$%&\'()*+,-./:;<=>?@[\\]^_`{|}~'

sample_clean = [item for item in sample if not item[0] in punctuation]

sample_clean

[('the', 4642),

('and', 4368),

('de', 3160),

('of', 2824),

('a', 2372),

('e', 2353),

('und', 2010),

('och', 1839),

('to', 1805),

('in', 1625)]

Activity Part 4: Null model¶

- Generate random text including the space character.

- Tokenize this string of gibberish

- Generate another plot of Zipf's

- Compare the two plots.

What do you make of Zipf's Law in the light of this?

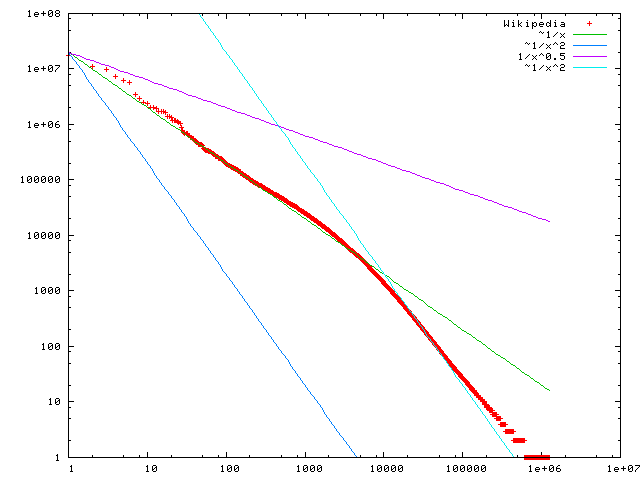

How does your result compare to Wikipedia?¶

Modern NLP A.I. tasks using HuggingFace transformer class¶

Install the Transformers, Datasets, and Evaluate libraries to run this notebook.

Installation (7/8/24)¶

This repository is tested on Python 3.8+, Flax 0.4.1+, PyTorch 1.11+, and TensorFlow 2.6+.

Virtual environments: https://docs.python.org/3/library/venv.html

Venv user guide: https://packaging.python.org/guides/installing-using-pip-and-virtual-environments/

- create a virtual environment with the version of Python you're going to use and activate it.

- install at least one of Flax, PyTorch, or TensorFlow. Please refer to TensorFlow installation page, PyTorch installation page and/or Flax and Jax installation pages regarding the specific installation command for your platform.

- install transformers using pip as follows:

pip install transformers

...or using conda...

conda install conda-forge::transformers

NOTE: Installing transformers from the huggingface channel is deprecated.

Note 7/8/24: got error when importing:

ImportError: huggingface-hub>=0.23.2,<1.0 is required for a normal functioning of this module, but found huggingface-hub==0.23.1.When installing conda-forge::transformers above it also installed huggingface_hub-0.23.1-py310haa95532_0 as a dependency. However if first run:

conda install conda-forge::huggingface_hubIt installs huggingface_hub-0.23.4. After this, can install conda-forge::transformers and import works without error.

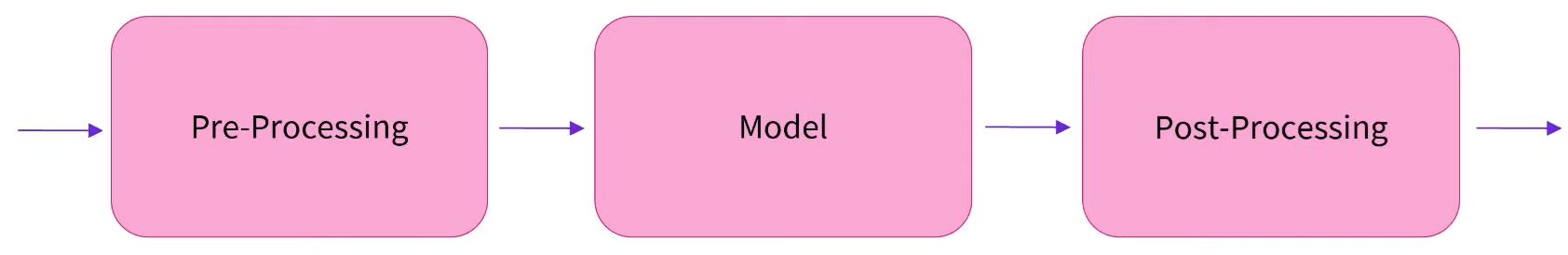

Hugging Face Pipelines¶

Base class implementing NLP operations. Pipeline workflow is defined as a sequence of the following operations:

- A tokenizer in charge of mapping raw textual input to token.

- A model to make predictions from the inputs.

- Some (optional) post processing for enhancing model’s output.

https://huggingface.co/docs/transformers/en/main_classes/pipelines

from transformers import pipeline

# first indicate ask. Model optional.

pipe = pipeline("text-classification", model="FacebookAI/roberta-large-mnli")

pipe("This restaurant is awesome")

[{'label': 'NEUTRAL', 'score': 0.7313136458396912}]

Sentiment analysis¶

classifier = pipeline("sentiment-analysis", model="distilbert/distilbert-base-uncased-finetuned-sst-2-english")

All PyTorch model weights were used when initializing TFDistilBertForSequenceClassification. All the weights of TFDistilBertForSequenceClassification were initialized from the PyTorch model. If your task is similar to the task the model of the checkpoint was trained on, you can already use TFDistilBertForSequenceClassification for predictions without further training.

classifier("I've been waiting for a HuggingFace course my whole life.")

[{'label': 'POSITIVE', 'score': 0.9598046541213989}]

classifier("I've been waiting for a HuggingFace course my whole life.")

[{'label': 'POSITIVE', 'score': 0.9598046541213989}]

classifier("I hate this so much!")

[{'label': 'NEGATIVE', 'score': 0.9994558691978455}]

"Zero-shot-classification"¶

classifier = pipeline("zero-shot-classification")

classifier(

"This is a course about the Transformers library",

candidate_labels=["education", "politics", "business"],

)

No model was supplied, defaulted to FacebookAI/roberta-large-mnli and revision 130fb28 (https://huggingface.co/FacebookAI/roberta-large-mnli). Using a pipeline without specifying a model name and revision in production is not recommended.

config.json: 0%| | 0.00/688 [00:00<?, ?B/s]

C:\Users\micro\anaconda3\envs\HF_070824\lib\site-packages\huggingface_hub\file_download.py:157: UserWarning: `huggingface_hub` cache-system uses symlinks by default to efficiently store duplicated files but your machine does not support them in C:\Users\micro\.cache\huggingface\hub\models--FacebookAI--roberta-large-mnli. Caching files will still work but in a degraded version that might require more space on your disk. This warning can be disabled by setting the `HF_HUB_DISABLE_SYMLINKS_WARNING` environment variable. For more details, see https://huggingface.co/docs/huggingface_hub/how-to-cache#limitations. To support symlinks on Windows, you either need to activate Developer Mode or to run Python as an administrator. In order to see activate developer mode, see this article: https://docs.microsoft.com/en-us/windows/apps/get-started/enable-your-device-for-development warnings.warn(message)

model.safetensors: 0%| | 0.00/1.43G [00:00<?, ?B/s]

All PyTorch model weights were used when initializing TFRobertaForSequenceClassification. All the weights of TFRobertaForSequenceClassification were initialized from the PyTorch model. If your task is similar to the task the model of the checkpoint was trained on, you can already use TFRobertaForSequenceClassification for predictions without further training.

tokenizer_config.json: 0%| | 0.00/25.0 [00:00<?, ?B/s]

vocab.json: 0%| | 0.00/899k [00:00<?, ?B/s]

merges.txt: 0%| | 0.00/456k [00:00<?, ?B/s]

tokenizer.json: 0%| | 0.00/1.36M [00:00<?, ?B/s]

{'sequence': 'This is a course about the Transformers library',

'labels': ['education', 'business', 'politics'],

'scores': [0.9562344551086426, 0.02697223611176014, 0.016793379560112953]}

Text generation¶

generator = pipeline("text-generation")

generator("In this course, we will teach you how to")

No model was supplied, defaulted to openai-community/gpt2 and revision 6c0e608 (https://huggingface.co/openai-community/gpt2). Using a pipeline without specifying a model name and revision in production is not recommended.

config.json: 0%| | 0.00/665 [00:00<?, ?B/s]

C:\Users\micro\anaconda3\envs\HF_070824\lib\site-packages\huggingface_hub\file_download.py:157: UserWarning: `huggingface_hub` cache-system uses symlinks by default to efficiently store duplicated files but your machine does not support them in C:\Users\micro\.cache\huggingface\hub\models--openai-community--gpt2. Caching files will still work but in a degraded version that might require more space on your disk. This warning can be disabled by setting the `HF_HUB_DISABLE_SYMLINKS_WARNING` environment variable. For more details, see https://huggingface.co/docs/huggingface_hub/how-to-cache#limitations. To support symlinks on Windows, you either need to activate Developer Mode or to run Python as an administrator. In order to see activate developer mode, see this article: https://docs.microsoft.com/en-us/windows/apps/get-started/enable-your-device-for-development warnings.warn(message)

model.safetensors: 0%| | 0.00/548M [00:00<?, ?B/s]

All PyTorch model weights were used when initializing TFGPT2LMHeadModel. All the weights of TFGPT2LMHeadModel were initialized from the PyTorch model. If your task is similar to the task the model of the checkpoint was trained on, you can already use TFGPT2LMHeadModel for predictions without further training.

tokenizer_config.json: 0%| | 0.00/26.0 [00:00<?, ?B/s]

vocab.json: 0%| | 0.00/1.04M [00:00<?, ?B/s]

merges.txt: 0%| | 0.00/456k [00:00<?, ?B/s]

tokenizer.json: 0%| | 0.00/1.36M [00:00<?, ?B/s]

Setting `pad_token_id` to `eos_token_id`:50256 for open-end generation.

[{'generated_text': "In this course, we will teach you how to do so, using techniques and techniques designed to assist in your own creative process and your own goals.\n\nHow do I know it's going to work?\n\nThere are many ways people say"}]

generator = pipeline("text-generation", model="distilgpt2")

generator(

"In this course, we will teach you how to",

max_length=30,

num_return_sequences=2,

)

All PyTorch model weights were used when initializing TFGPT2LMHeadModel. All the weights of TFGPT2LMHeadModel were initialized from the PyTorch model. If your task is similar to the task the model of the checkpoint was trained on, you can already use TFGPT2LMHeadModel for predictions without further training. Truncation was not explicitly activated but `max_length` is provided a specific value, please use `truncation=True` to explicitly truncate examples to max length. Defaulting to 'longest_first' truncation strategy. If you encode pairs of sequences (GLUE-style) with the tokenizer you can select this strategy more precisely by providing a specific strategy to `truncation`. Setting `pad_token_id` to `eos_token_id`:50256 for open-end generation.

[{'generated_text': 'In this course, we will teach you how to practice the magic of magic and how to do this.\n\n\nA big part of this course'},

{'generated_text': 'In this course, we will teach you how to build your own self-defense systems. The first step is to create the first self-defense system'}]

unmasker = pipeline("fill-mask")

unmasker("This course will teach you all about <mask> models.", top_k=2)

No model was supplied, defaulted to distilbert/distilroberta-base and revision ec58a5b (https://huggingface.co/distilbert/distilroberta-base). Using a pipeline without specifying a model name and revision in production is not recommended. All PyTorch model weights were used when initializing TFRobertaForMaskedLM. All the weights of TFRobertaForMaskedLM were initialized from the PyTorch model. If your task is similar to the task the model of the checkpoint was trained on, you can already use TFRobertaForMaskedLM for predictions without further training.

[{'score': 0.19619587063789368,

'token': 30412,

'token_str': ' mathematical',

'sequence': 'This course will teach you all about mathematical models.'},

{'score': 0.040526941418647766,

'token': 38163,

'token_str': ' computational',

'sequence': 'This course will teach you all about computational models.'}]

Named entity recognition¶

ner = pipeline("ner", grouped_entities=True)

ner("My name is Sylvain and I work at Hugging Face in Brooklyn.")

No model was supplied, defaulted to dbmdz/bert-large-cased-finetuned-conll03-english and revision f2482bf (https://huggingface.co/dbmdz/bert-large-cased-finetuned-conll03-english). Using a pipeline without specifying a model name and revision in production is not recommended.

config.json: 0%| | 0.00/998 [00:00<?, ?B/s]

model.safetensors: 0%| | 0.00/1.33G [00:00<?, ?B/s]

All PyTorch model weights were used when initializing TFBertForTokenClassification. All the weights of TFBertForTokenClassification were initialized from the PyTorch model. If your task is similar to the task the model of the checkpoint was trained on, you can already use TFBertForTokenClassification for predictions without further training.

tokenizer_config.json: 0%| | 0.00/60.0 [00:00<?, ?B/s]

vocab.txt: 0%| | 0.00/213k [00:00<?, ?B/s]

C:\Users\micro\anaconda3\envs\HF_070824\lib\site-packages\transformers\pipelines\token_classification.py:168: UserWarning: `grouped_entities` is deprecated and will be removed in version v5.0.0, defaulted to `aggregation_strategy="simple"` instead. warnings.warn(

[{'entity_group': 'PER',

'score': 0.9981694,

'word': 'Sylvain',

'start': 11,

'end': 18},

{'entity_group': 'ORG',

'score': 0.9796019,

'word': 'Hugging Face',

'start': 33,

'end': 45},

{'entity_group': 'LOC',

'score': 0.9932106,

'word': 'Brooklyn',

'start': 49,

'end': 57}]

Question answering¶

question_answerer = pipeline("question-answering")

question_answerer(

question="Where do I work?",

context="My name is Sylvain and I work at Hugging Face in Brooklyn",

)

No model was supplied, defaulted to distilbert/distilbert-base-cased-distilled-squad and revision 626af31 (https://huggingface.co/distilbert/distilbert-base-cased-distilled-squad). Using a pipeline without specifying a model name and revision in production is not recommended.

config.json: 0%| | 0.00/473 [00:00<?, ?B/s]

model.safetensors: 0%| | 0.00/261M [00:00<?, ?B/s]

All PyTorch model weights were used when initializing TFDistilBertForQuestionAnswering. All the weights of TFDistilBertForQuestionAnswering were initialized from the PyTorch model. If your task is similar to the task the model of the checkpoint was trained on, you can already use TFDistilBertForQuestionAnswering for predictions without further training.

tokenizer_config.json: 0%| | 0.00/49.0 [00:00<?, ?B/s]

vocab.txt: 0%| | 0.00/213k [00:00<?, ?B/s]

tokenizer.json: 0%| | 0.00/436k [00:00<?, ?B/s]

{'score': 0.6949759125709534, 'start': 33, 'end': 45, 'answer': 'Hugging Face'}

question_answerer(

question="How many years old am I?",

context="I was both in 1990. This is 2023. Hello.",

)

{'score': 0.8601788878440857, 'start': 28, 'end': 32, 'answer': '2023'}

Summarization¶

summarizer = pipeline("summarization")

summarizer(

"""

America has changed dramatically during recent years. Not only has the number of

graduates in traditional engineering disciplines such as mechanical, civil,

electrical, chemical, and aeronautical engineering declined, but in most of

the premier American universities engineering curricula now concentrate on

and encourage largely the study of engineering science. As a result, there

are declining offerings in engineering subjects dealing with infrastructure,

the environment, and related issues, and greater concentration on high

technology subjects, largely supporting increasingly complex scientific

developments. While the latter is important, it should not be at the expense

of more traditional engineering.

Rapidly developing economies such as China and India, as well as other

industrial countries in Europe and Asia, continue to encourage and advance

the teaching of engineering. Both China and India, respectively, graduate

six and eight times as many traditional engineers as does the United States.

Other industrial countries at minimum maintain their output, while America

suffers an increasingly serious decline in the number of engineering graduates

and a lack of well-educated engineers.

"""

)

No model was supplied, defaulted to sshleifer/distilbart-cnn-12-6 and revision a4f8f3e (https://huggingface.co/sshleifer/distilbart-cnn-12-6). Using a pipeline without specifying a model name and revision in production is not recommended.

config.json: 0%| | 0.00/1.80k [00:00<?, ?B/s]

pytorch_model.bin: 0%| | 0.00/1.22G [00:00<?, ?B/s]

tokenizer_config.json: 0%| | 0.00/26.0 [00:00<?, ?B/s]

vocab.json: 0%| | 0.00/899k [00:00<?, ?B/s]

merges.txt: 0%| | 0.00/456k [00:00<?, ?B/s]

[{'summary_text': ' America has changed dramatically during recent years . The number of engineering graduates in the U.S. has declined in traditional engineering disciplines such as mechanical, civil, electrical, chemical, and aeronautical engineering . Rapidly developing economies such as China and India continue to encourage and advance the teaching of engineering .'}]

Machine Translation¶

from transformers import pipeline

translator = pipeline("translation", model="Helsinki-NLP/opus-mt-fr-en")

translator("Ce cours est produit par Hugging Face.")

pytorch_model.bin: 0%| | 0.00/301M [00:00<?, ?B/s]

--------------------------------------------------------------------------- ValueError Traceback (most recent call last) Cell In[5], line 3 1 from transformers import pipeline ----> 3 translator = pipeline("translation", model="Helsinki-NLP/opus-mt-fr-en") 4 translator("Ce cours est produit par Hugging Face.") File ~\anaconda3\envs\HF_070824\lib\site-packages\transformers\pipelines\__init__.py:994, in pipeline(task, model, config, tokenizer, feature_extractor, image_processor, framework, revision, use_fast, token, device, device_map, torch_dtype, trust_remote_code, model_kwargs, pipeline_class, **kwargs) 991 tokenizer_kwargs = model_kwargs.copy() 992 tokenizer_kwargs.pop("torch_dtype", None) --> 994 tokenizer = AutoTokenizer.from_pretrained( 995 tokenizer_identifier, use_fast=use_fast, _from_pipeline=task, **hub_kwargs, **tokenizer_kwargs 996 ) 998 if load_image_processor: 999 # Try to infer image processor from model or config name (if provided as str) 1000 if image_processor is None: File ~\anaconda3\envs\HF_070824\lib\site-packages\transformers\models\auto\tokenization_auto.py:913, in AutoTokenizer.from_pretrained(cls, pretrained_model_name_or_path, *inputs, **kwargs) 911 return tokenizer_class_py.from_pretrained(pretrained_model_name_or_path, *inputs, **kwargs) 912 else: --> 913 raise ValueError( 914 "This tokenizer cannot be instantiated. Please make sure you have `sentencepiece` installed " 915 "in order to use this tokenizer." 916 ) 918 raise ValueError( 919 f"Unrecognized configuration class {config.__class__} to build an AutoTokenizer.\n" 920 f"Model type should be one of {', '.join(c.__name__ for c in TOKENIZER_MAPPING.keys())}." 921 ) ValueError: This tokenizer cannot be instantiated. Please make sure you have `sentencepiece` installed in order to use this tokenizer.

Bias in pretrained models¶

Historic and stereotypical outputs often statistically most likely in the large datasets used ~ data scraped from the internet or past decades of books

BERT trained on English Wikipedia and BookCorpus datasets

from transformers import pipeline

unmasker = pipeline("fill-mask", model="bert-base-uncased")

result1 = unmasker("This man works as a [MASK].")

print('Man:',[r["token_str"] for r in result1])

result2 = unmasker("This woman works as a [MASK].")

print('Woman:',[r["token_str"] for r in result2])

Some weights of the model checkpoint at bert-base-uncased were not used when initializing BertForMaskedLM: ['bert.pooler.dense.bias', 'bert.pooler.dense.weight', 'cls.seq_relationship.bias', 'cls.seq_relationship.weight'] - This IS expected if you are initializing BertForMaskedLM from the checkpoint of a model trained on another task or with another architecture (e.g. initializing a BertForSequenceClassification model from a BertForPreTraining model). - This IS NOT expected if you are initializing BertForMaskedLM from the checkpoint of a model that you expect to be exactly identical (initializing a BertForSequenceClassification model from a BertForSequenceClassification model).

Man: ['carpenter', 'lawyer', 'farmer', 'businessman', 'doctor'] Woman: ['nurse', 'maid', 'teacher', 'waitress', 'prostitute']

scores1 = [r['score'] for r in result1]

labels1 = [r["token_str"] for r in result1]

scores2 = [r['score'] for r in result2]

labels2 = [r["token_str"] for r in result2]

from matplotlib.pyplot import *

figure(figsize = (5,2))

bar(labels1, scores1);

title('Men');

figure(figsize = (5,2))

bar(labels2, scores2);

title('Women');

result1

[{'score': 0.0751064345240593,

'token': 10533,

'token_str': 'carpenter',

'sequence': 'this man works as a carpenter.'},

{'score': 0.0464191772043705,

'token': 5160,

'token_str': 'lawyer',

'sequence': 'this man works as a lawyer.'},

{'score': 0.03914564475417137,

'token': 7500,

'token_str': 'farmer',

'sequence': 'this man works as a farmer.'},

{'score': 0.03280140459537506,

'token': 6883,

'token_str': 'businessman',

'sequence': 'this man works as a businessman.'},

{'score': 0.02929229475557804,

'token': 3460,

'token_str': 'doctor',

'sequence': 'this man works as a doctor.'}]